An Introduction to Adversarial Attacks in Machine Learning, with Examples

Source: DeepHub IMBA. This article is about 1,000 words; suggested reading time is 5 minutes.

This article shows how tiny changes to an input can have catastrophic effects.

Concept

Hands-on Example

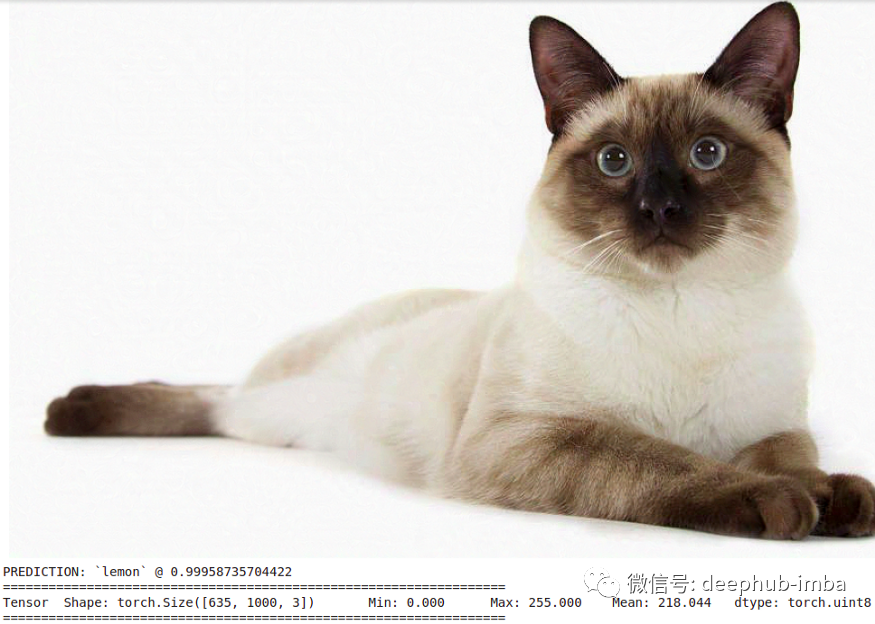

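    import torch
    import torch.nn.functional as F

    # `model`, `show`, `image2tensor` and `image_net_ids` are assumed to be
    # defined earlier in the article's setup code.
    def predict_on_image(input):
        model.eval()
        show(input)
        input = image2tensor(input)
        pred = model(input)
        # Turn the logits into probabilities and keep the most likely class.
        pred = F.softmax(pred, dim=-1)[0]
        prob, clss = torch.max(pred, 0)
        clss = image_net_ids[clss.item()]
        print(f'PREDICTION: `{clss}` @ {prob.item()}')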

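    import torch
    import torch.nn as nn
    from tqdm import trange

    losses = []

    # `image2tensor`, `tensor2image`, `image_net_classes`, `model` and
    # `original_image` are assumed to be defined earlier in the article's setup code.
    def attack(image, model, target, epsilon=1e-6):
        input = image2tensor(image)
        input.requires_grad = True
        pred = model(input)
        loss = nn.CrossEntropyLoss()(pred, target)
        loss.backward()
        losses.append(loss.mean().item())
        # Step against the sign of the gradient so the loss for `target` goes down.
        output = input - epsilon * input.grad.sign()
        print(input.grad.sign())
        output = tensor2image(output)
        del input
        return output.detach()

    modified_images = []
    desired_targets = ['lemon']

    for target in desired_targets:
        target = torch.tensor([image_net_classes[target]])
        image_to_attack = original_image.clone()
        # Apply the small signed step ten times in a row.
        for _ in trange(10):
            image_to_attack = attack(image_to_attack, model, target)
        modified_images.append(image_to_attack)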
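The update in `attack`, `output = input - epsilon * input.grad.sign()`, resembles an iterated, targeted form of the fast gradient sign method: each pixel moves a fixed amount in whichever direction lowers the loss for the desired class. The sketch below replays that idea on a toy linear softmax classifier in plain Python, so it runs without a pretrained network. The weights `W`, the input `x0`, the step size and the helper names are all hypothetical, chosen only for illustration; they are not taken from the article's model.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def logits_for(W, x):
    # Linear classifier: one logit per row of W.
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def predict(W, x):
    p = softmax(logits_for(W, x))
    return max(range(len(p)), key=p.__getitem__)

def input_gradient(W, x, target):
    # Gradient of cross-entropy(softmax(Wx), target) with respect to x,
    # which for a linear model is W^T (p - onehot(target)).
    p = softmax(logits_for(W, x))
    delta = [pk - (1.0 if k == target else 0.0) for k, pk in enumerate(p)]
    return [sum(W[k][j] * delta[k] for k in range(len(W))) for j in range(len(x))]

def sign(v):
    return (v > 0) - (v < 0)

def targeted_step(W, x, target, epsilon):
    # Same rule as in `attack`: move against the gradient sign.
    grad = input_gradient(W, x, target)
    return [xi - epsilon * sign(g) for xi, g in zip(x, grad)]

# Hypothetical toy setup: 2 classes, 3 input features.
W = [[1.0, -1.0, 0.5],
     [-1.0, 1.0, -0.5]]
x0 = [0.6, 0.4, 0.44]
target = 1

x = list(x0)
steps = 0
while predict(W, x) != target and steps < 100:
    x = targeted_step(W, x, target, epsilon=0.02)
    steps += 1

max_change = max(abs(a - b) for a, b in zip(x, x0))
print(f"class {predict(W, x0)} -> class {predict(W, x)} "
      f"after {steps} steps, max per-feature change {max_change:.2f}")
```

No single feature ever moves by more than `epsilon` per step, yet the accumulated shift is enough to push the input across the decision boundary. On a real image model the same mechanism is spread over many thousands of pixels, which is why the per-pixel change can be far smaller.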

Summary

https://openai.com/blog/adversarial-example-research/
